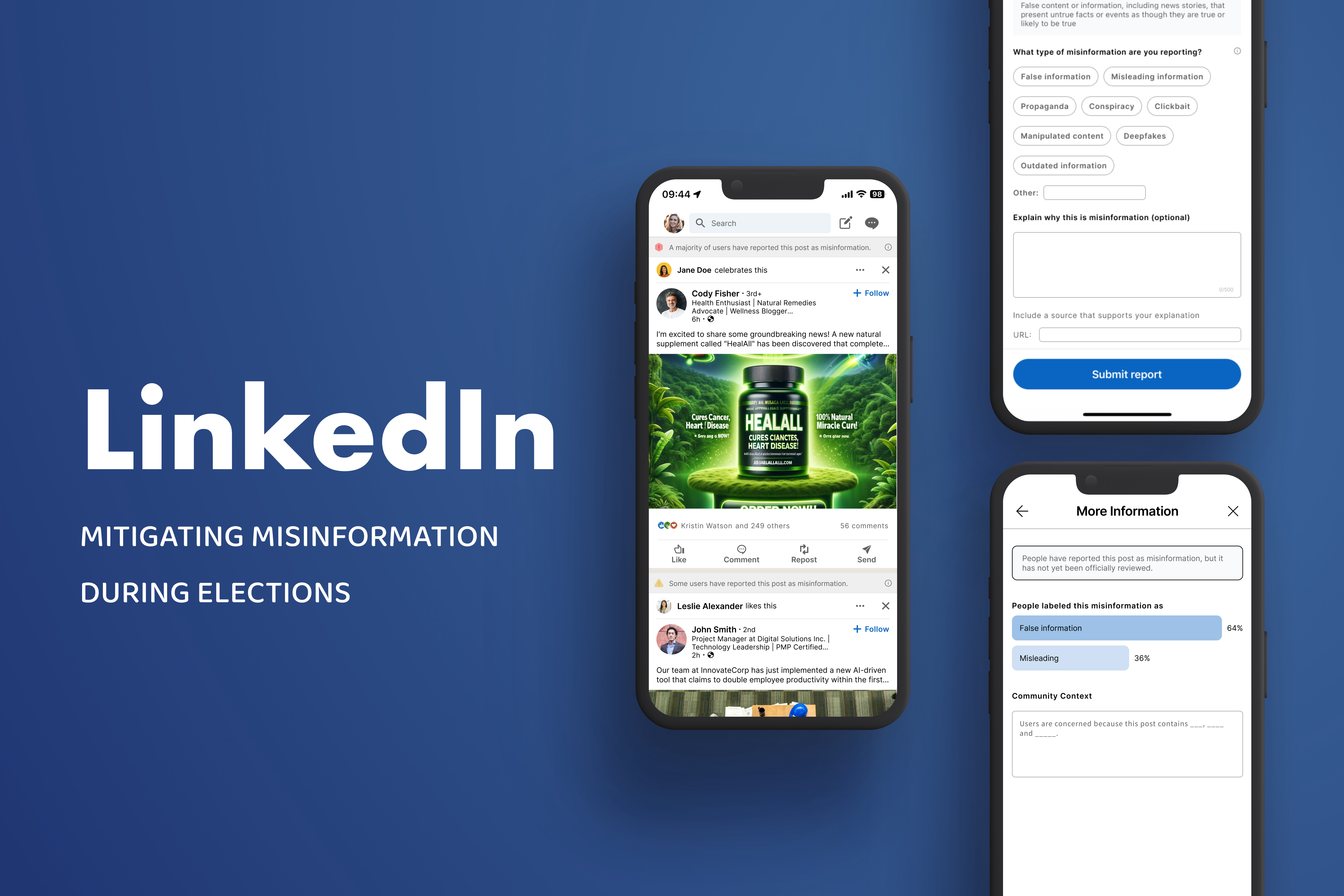

Understanding User Trust and Misinformation Reporting on LinkedIn

Research Abstract

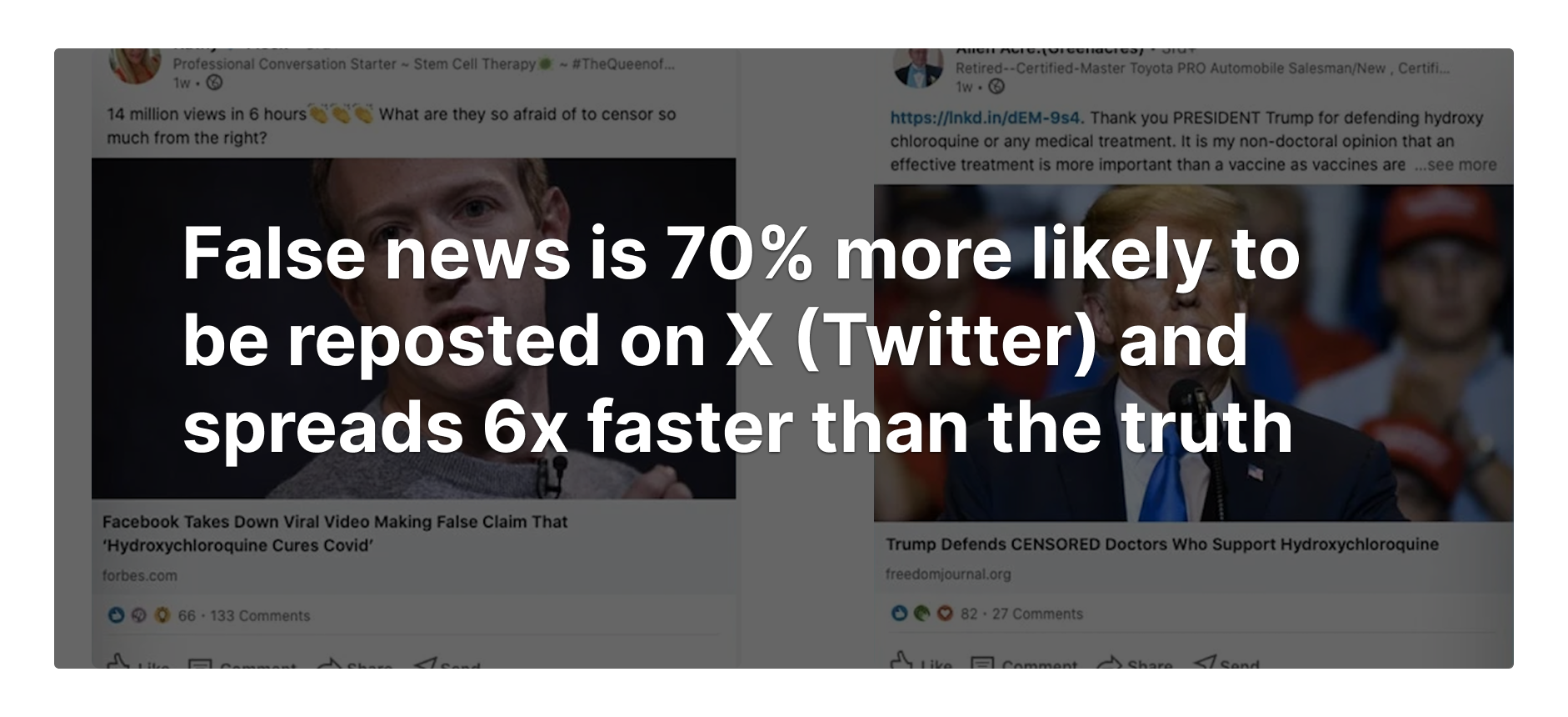

As misinformation spreads faster than the truth, trust on platforms like LinkedIn is increasingly fragile. Through mixed-methods research with 200+ LinkedIn users, this study identifies where users struggle most and where the product can realistically intervene.

Role

UX Researcher

Timeline

3 Months

Company

LinkedIn (Research Project)

Research Ownership

- • Designed and executed a mixed-methods study using survey and interview data.

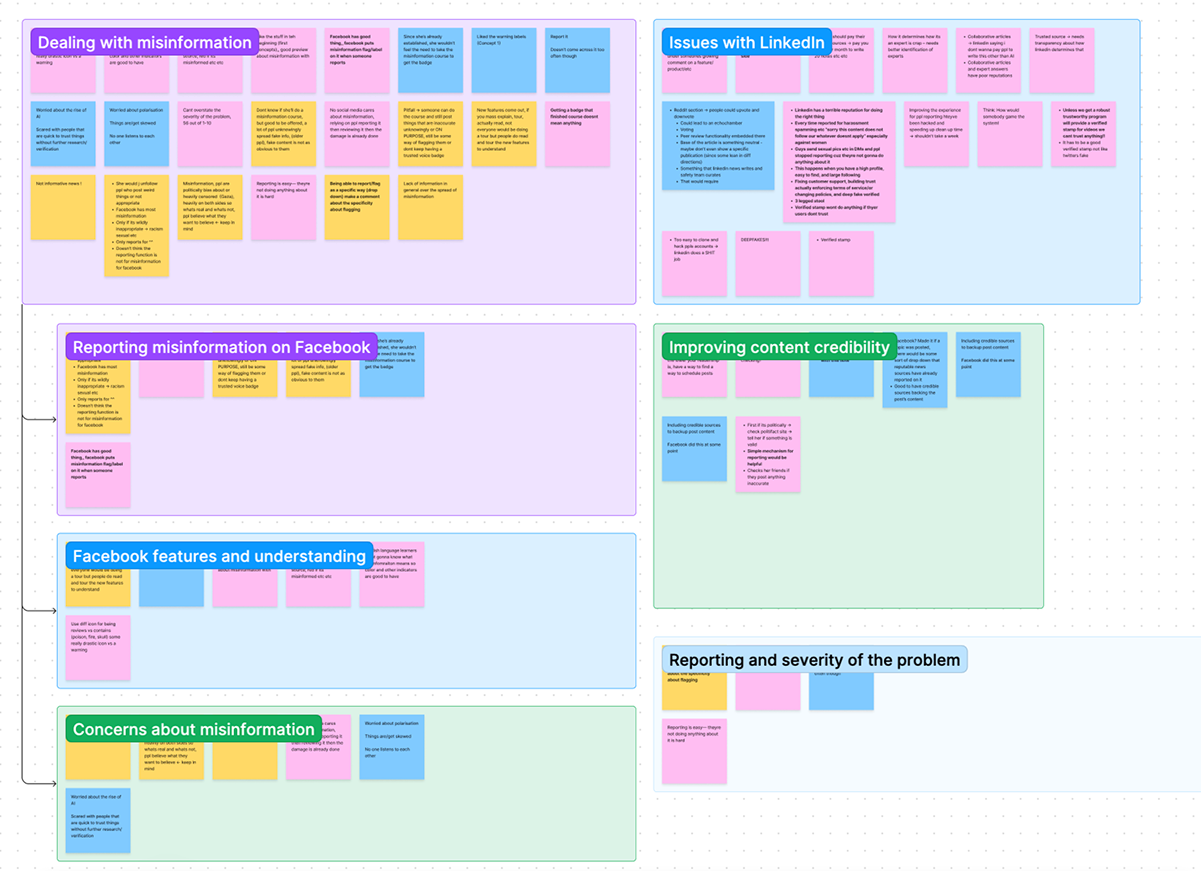

- • Led qualitative interviews to explore reporting behaviors and perceived misinformation markers.

- • Synthesized behavioral findings into 3 actionable design concepts.

- • Partnered with engineering to validate research implications against real-time technical constraints.

- • Produced a strategic white paper connecting research insights to long-term user trust and safety planning.

Imagine you are scrolling on LinkedIn...

Research Context

The 23-Minute Gap

Currently, LinkedIn takes approximately 23 minutes to process a reported post. Throughout this window, the reported content remains fully visible and can continue to spread.

With over 60 countries holding elections in 2024, political debate could further accelerate the spread of false information, potentially misleading thousands of users.

Research Question

“How might we address the spread of misinformation during the 23-minute moderation window to maintain user trust?”

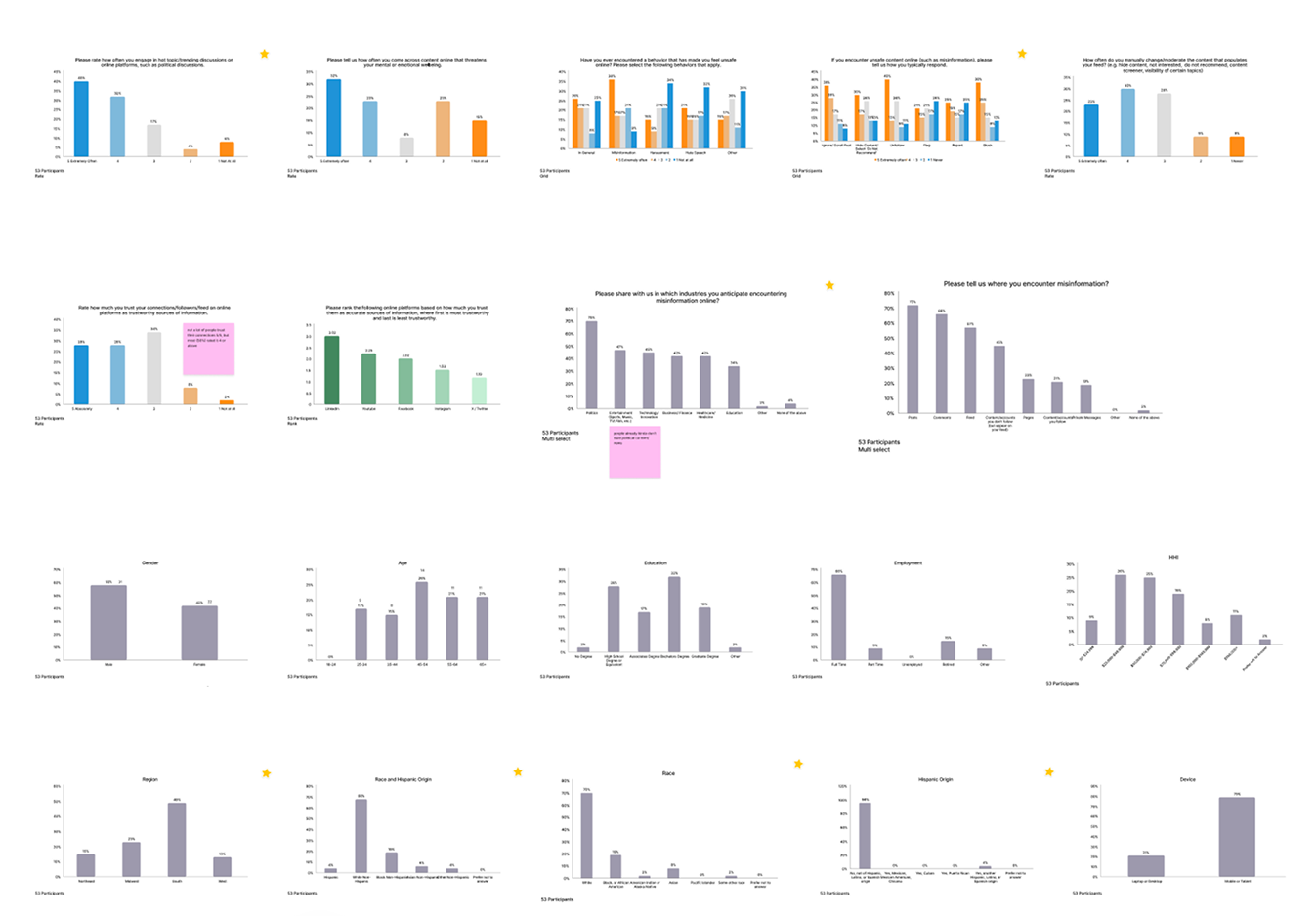

Methods

Survey Participants

Identify macro patterns in reporting behavior and trust levels across different content types.

In-depth Interviews

Explore how users judge credibility and react to flagged content.

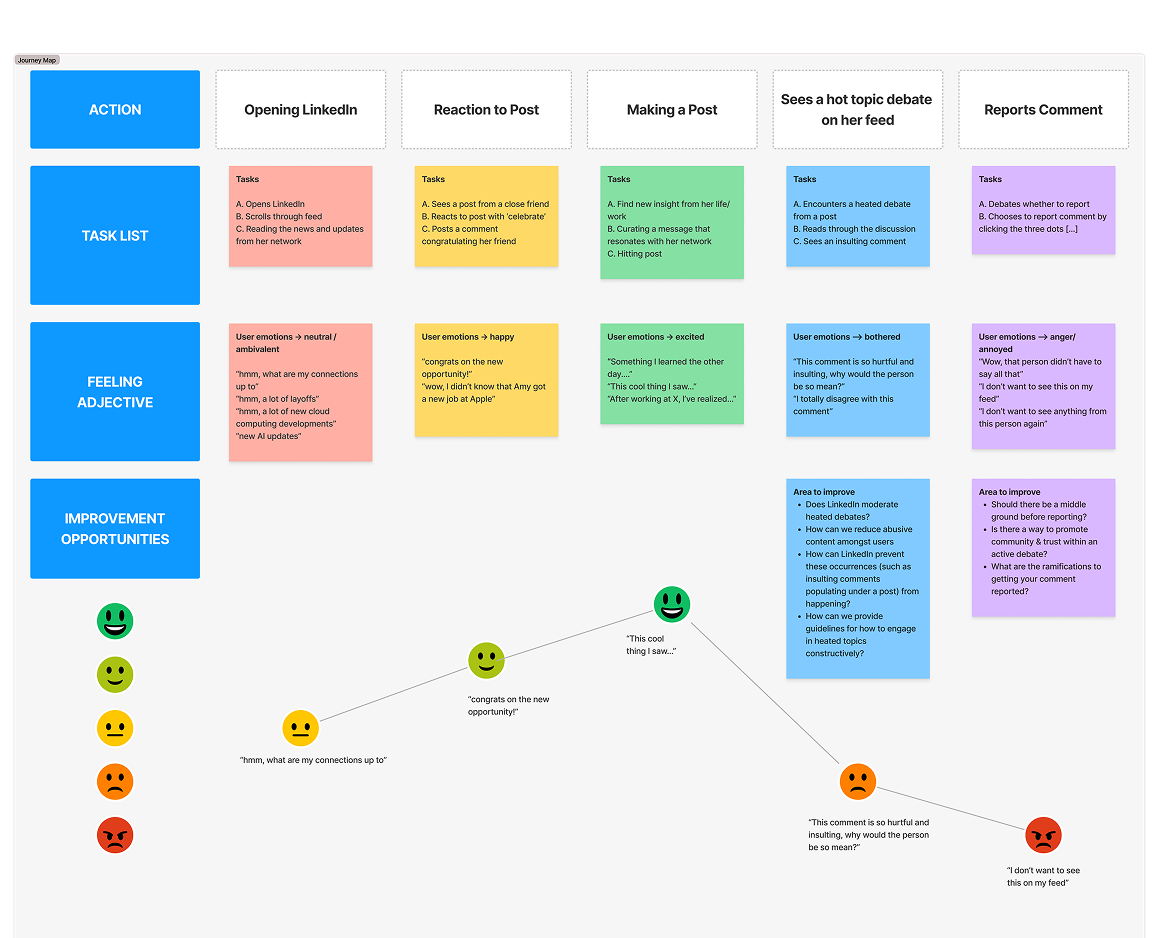

Journey Mapping

Visualize the lifecycle of a reported post and identify where users experience friction and lose trust.

A Dual Perspective

To address misinformation on LinkedIn, we engaged with both the users who encounter content and the creators who publish it.

Key Findings

Users experience a general lack of trust

“With all the misinformation and fake news out there now, I just can’t trust anything I see online anymore.”

Users had difficulty identifying misinformation independently

Social validation from their network outweighed credibility cues.

“It's tough to spot misinformation, when everyone in my network shares the same views.”

Users struggled to trust the flagging system

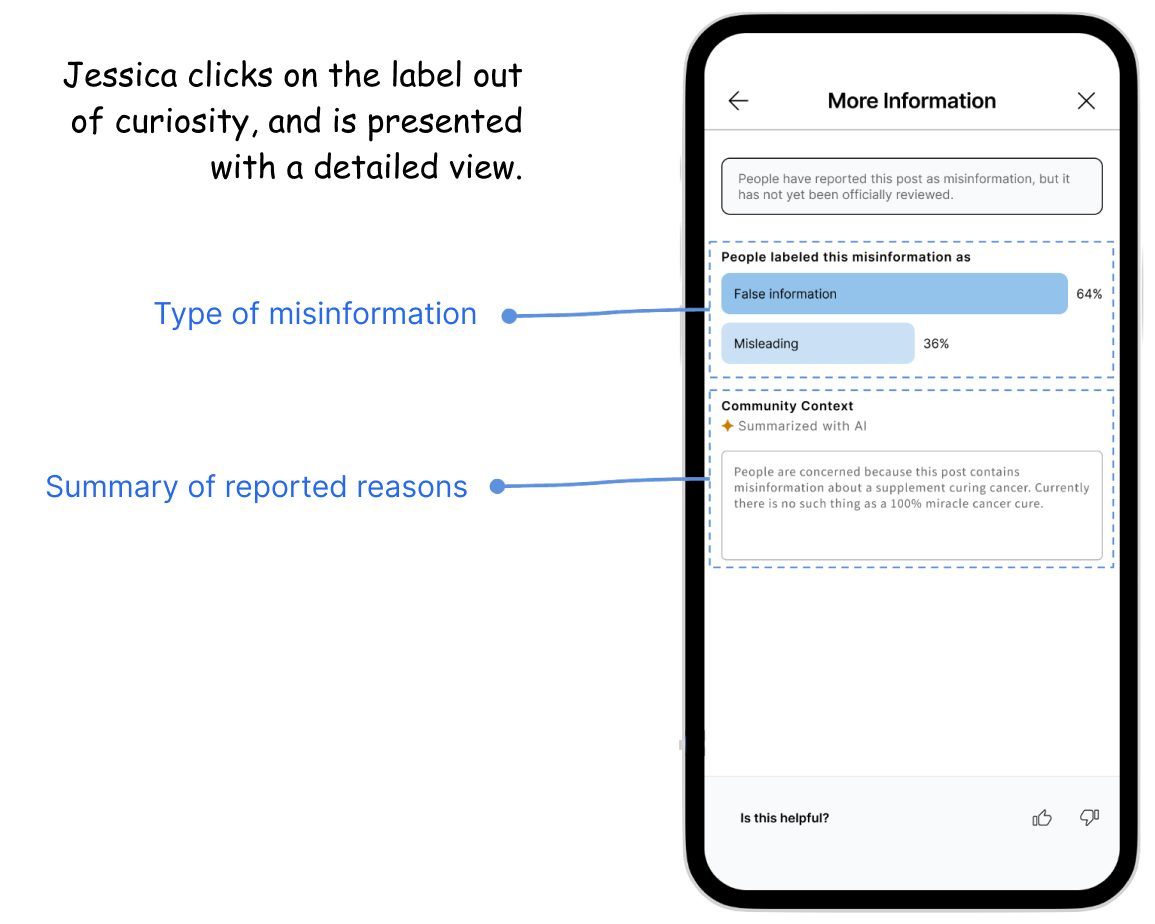

because the platform does not explain why content was labeled as false.

“Even when misinformation is flagged, I’m frustrated because I often don’t even understand why it’s considered false.”

Product Implications

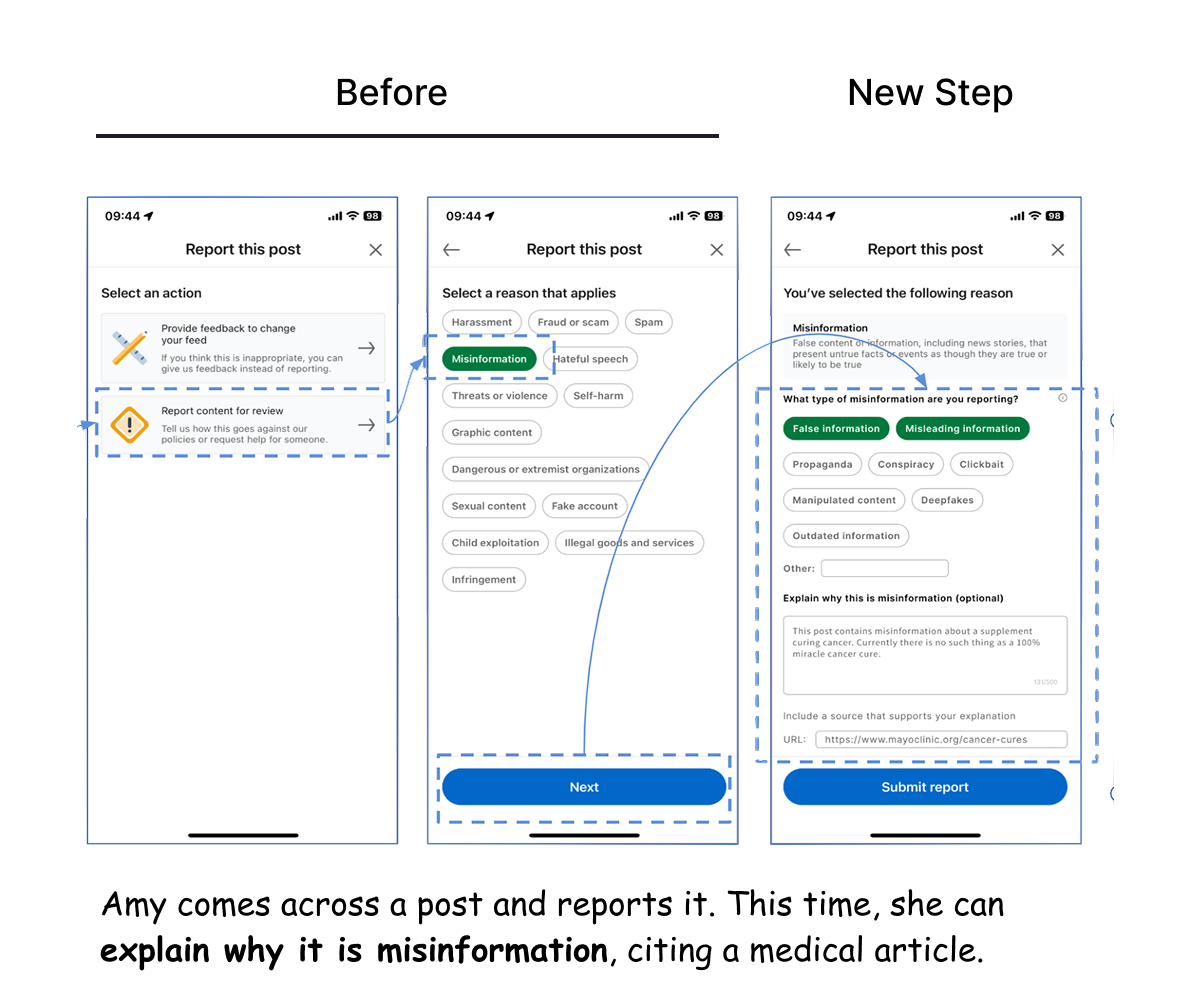

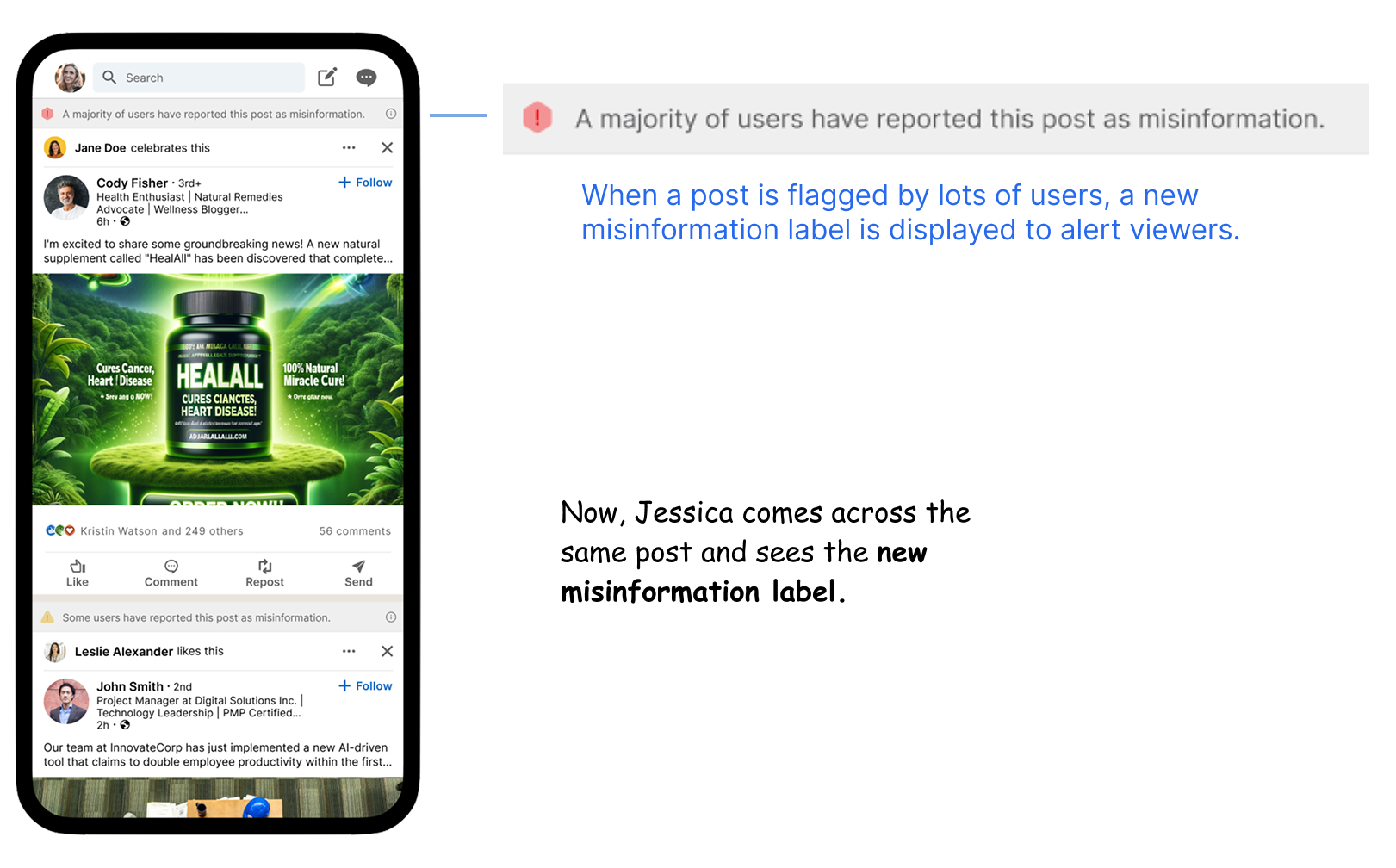

Enhanced Report Context

Intervention Over Absolute Removal

Transparency as Education

Measured Impact

Increase in Platform Trust

say it improves spotting misinformation

find the explanations useful

Now, during those crucial 23 minutes, users can become more aware, identify inaccuracies, and think more critically about the content they consume.

Learnings & Reflection

Managing Conflicting Feedback

When two user groups presented conflicting opinions, I prioritized quantitative patterns from our primary target users to ensure the final research implications aligned with overarching business goals.

Learning from the User Journey

Following users through the reporting journey taught me that decision-making is rarely linear and is highly context-dependent. It also showed me how valuable journey mapping is for uncovering nuanced behaviors.